What would add value to Wikipedia pages for clinical trials? How could such pages support, for example, recruiting and retaining patients? How could the underlying structured data in Wikidata be useful?

I had hoped to explore these questions during an internal innovation day. Instead, I had the chance to look into another interesting topic: Jupyter Notebooks, something I have been eager to explore since last summer.

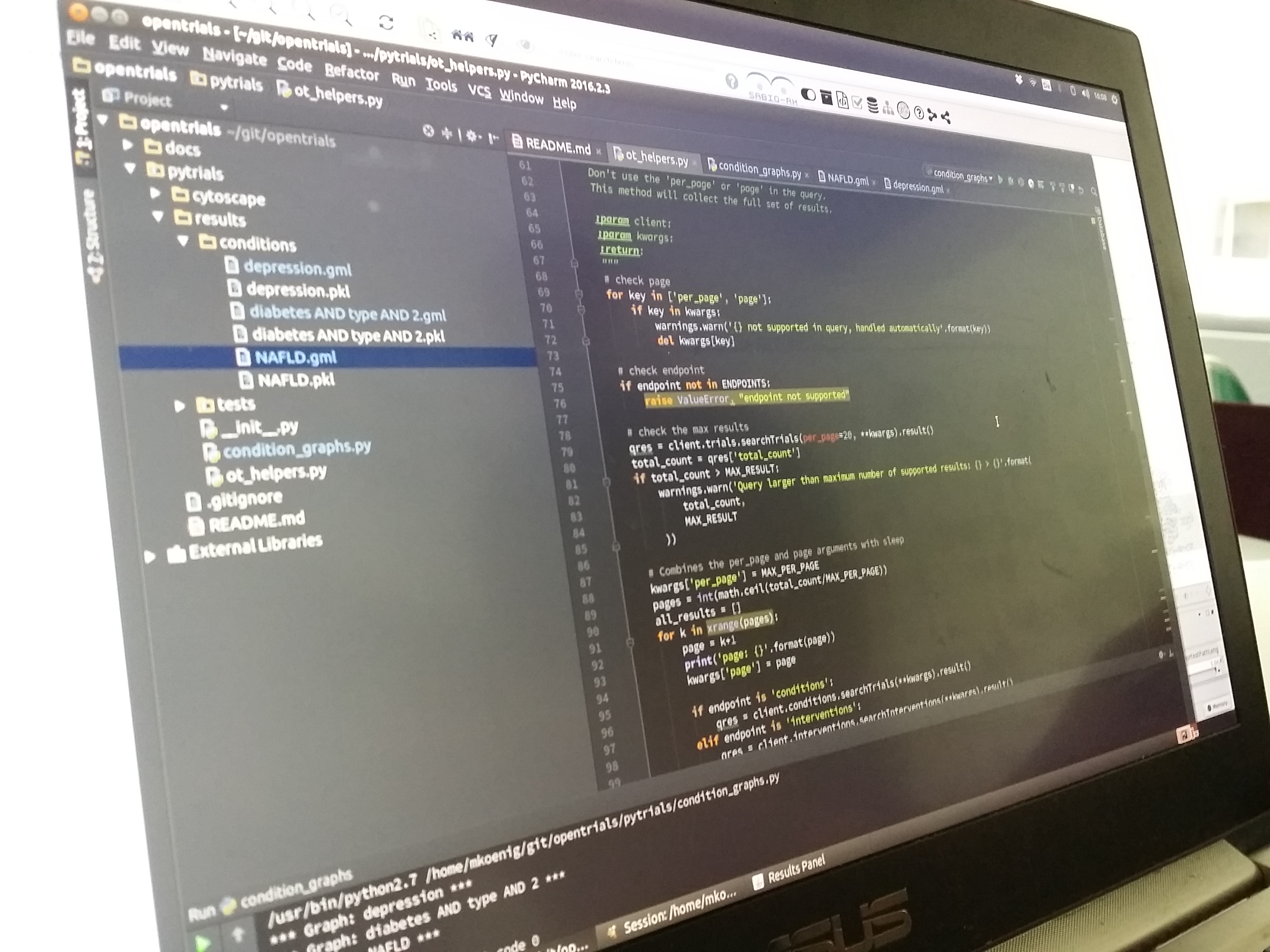

Anyhow, I hope to get involved in this interesting area during 2017. I see great opportunities both to contribute to it and to leverage it in the work I do internally on a master list of clinical studies. I have started by engaging in two issues on the OpenTrials GitHub: one on normalizing study_phase values, and a discussion about study identifiers. Below is some background on clinical trials on Wikipedia and in Wikidata, its data backbone, followed by a note about OpenTrials.

As of today (November 2016), a few studies have Wikipedia pages, e.g. Lilly's PARAMOUNT study. Sixteen studies, including the Lilly study, are typed as Clinical Trial (Q30612) in Wikidata (SPARQL query). The Wikidata identifier for the Lilly study is Q17148583 and its URI is

http://www.wikidata.org/entity/Q17148583
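
To reproduce the list of trials yourself, for instance in a Jupyter Notebook, a minimal Python sketch could send a SPARQL query to the public Wikidata Query Service endpoint (https://query.wikidata.org/sparql) and pull the QIDs out of the entity URIs. The query assumes that "typed as Clinical Trial" means instance of (P31) Q30612; the function names and the User-Agent string are my own, hypothetical choices.

```python
import json
import urllib.parse
import urllib.request

WDQS_ENDPOINT = "https://query.wikidata.org/sparql"

# Assumption: "typed as Clinical Trial" = instance of (P31) Q30612.
# The label service adds an English ?trialLabel for each ?trial.
TRIALS_QUERY = """
SELECT ?trial ?trialLabel WHERE {
  ?trial wdt:P31 wd:Q30612 .
  SERVICE wikibase:label { bd:serviceParam wikibase:language "en". }
}
"""


def run_sparql(query: str, endpoint: str = WDQS_ENDPOINT) -> dict:
    """Send a SPARQL query via GET and return the decoded SPARQL JSON results."""
    url = endpoint + "?" + urllib.parse.urlencode({"query": query, "format": "json"})
    request = urllib.request.Request(
        url,
        headers={
            "Accept": "application/sparql-results+json",
            # Hypothetical User-Agent; the service asks clients to identify themselves.
            "User-Agent": "trials-notebook-example/0.1",
        },
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def qid_from_uri(uri: str) -> str:
    """Turn http://www.wikidata.org/entity/Q17148583 into Q17148583."""
    return uri.rstrip("/").rsplit("/", 1)[-1]


def trials_from_results(results: dict) -> list[tuple[str, str]]:
    """Extract (QID, label) pairs; fall back to the QID if no label is bound."""
    rows = []
    for binding in results["results"]["bindings"]:
        qid = qid_from_uri(binding["trial"]["value"])
        label = binding.get("trialLabel", {}).get("value", qid)
        rows.append((qid, label))
    return rows
```

Calling trials_from_results(run_sparql(TRIALS_QUERY)) needs network access and returns (QID, label) pairs; the count will of course change as more trials are added to Wikidata.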

Wikidata is the backbone of Wikipedia, where the entities behind Wikipedia pages, such as compounds, are registered. For example, the Wikidata entity rosuvastatin (Q415159) holds some of the core structured data behind the infobox on the right of the Wikipedia page for Rosuvastatin.

Wikidata entity and Wikipedia page for Rosuvastatin (also known as Crestor)
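
The structured data behind an infobox can also be read directly from an entity's JSON record. The sketch below assumes Wikidata's Special:EntityData JSON export and its claims/mainsnak layout; it fetches one entity and lists the values of one property. The helper names and User-Agent are hypothetical, and which property carries a given fact (for example instance of, P31, versus subclass of, P279) is worth checking on the entity page itself.

```python
import json
import urllib.request

# Per-entity JSON export; {qid} is filled in, e.g. Q415159 (rosuvastatin).
ENTITY_DATA_URL = "https://www.wikidata.org/wiki/Special:EntityData/{qid}.json"


def fetch_entity(qid: str) -> dict:
    """Download the JSON record for one Wikidata entity (requires network access)."""
    request = urllib.request.Request(
        ENTITY_DATA_URL.format(qid=qid),
        headers={"User-Agent": "wikidata-claims-example/0.1"},  # hypothetical UA string
    )
    with urllib.request.urlopen(request) as response:
        # The export wraps the record as {"entities": {"<QID>": {...}}}.
        return json.load(response)["entities"][qid]


def claim_values(entity: dict, prop: str) -> list:
    """Return the values of all statements for a property such as P31, P279 or P267.

    Item values (e.g. a class like statin) are returned as QIDs; plain string
    values (e.g. ATC codes) are returned as-is. Statements whose main snak has
    no concrete value ("novalue" / "somevalue") are skipped.
    """
    values = []
    for statement in entity.get("claims", {}).get(prop, []):
        snak = statement["mainsnak"]
        if snak.get("snaktype") != "value":
            continue
        value = snak["datavalue"]["value"]
        if isinstance(value, dict) and "id" in value:
            value = value["id"]  # wikibase-entityid values carry the QID under "id"
        values.append(value)
    return values
```

For example, claim_values(fetch_entity("Q415159"), "P267") should return rosuvastatin's ATC code(s), provided the statement is present on the entity.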

Examples of structured data for Rosuvastatin in Wikidata: it is classified as a pharmaceutical drug (Q12140), it is a subclass of statin (Q954845), and its ATC code is given by the property P267. The structured data in Wikidata can be queried using SPARQL, a query language for data structured as so-called RDF. There is also a live SPARQL query that returns the ATC codes for statins.

Live link to the SPARQL example: http://tinyurl.com/hd4f3x7
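
The query behind that link is not reproduced here, so the following Python sketch is my own approximation of it: drugs that are an instance of (P31) or subclass of (P279) statin (Q954845), together with their ATC codes (P267). The alternative property path covers both modelling choices in case the subclass relation described above has since changed, and DISTINCT removes duplicates when both hold. Function names and the User-Agent are hypothetical.

```python
import json
import urllib.parse
import urllib.request
from collections import defaultdict

WDQS_ENDPOINT = "https://query.wikidata.org/sparql"


def atc_query(class_qid: str = "Q954845") -> str:
    """SPARQL for drugs that are an instance or subclass of class_qid, with ATC codes (P267)."""
    # Doubled braces are literal { } in the f-string.
    return f"""SELECT DISTINCT ?drug ?drugLabel ?atc WHERE {{
  ?drug wdt:P31|wdt:P279 wd:{class_qid} ;
        wdt:P267 ?atc .
  SERVICE wikibase:label {{ bd:serviceParam wikibase:language "en". }}
}}"""


def run_query(query: str) -> dict:
    """Send the query with a GET request and return SPARQL JSON results (needs network)."""
    url = WDQS_ENDPOINT + "?" + urllib.parse.urlencode({"query": query, "format": "json"})
    request = urllib.request.Request(url, headers={"User-Agent": "statin-atc-example/0.1"})
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def atc_codes_by_drug(results: dict) -> dict[str, list[str]]:
    """Group ATC codes by drug label, since one drug can have several ATC codes."""
    codes: dict[str, list[str]] = defaultdict(list)
    for row in results["results"]["bindings"]:
        codes[row["drugLabel"]["value"]].append(row["atc"]["value"])
    return dict(codes)
```

In a notebook, atc_codes_by_drug(run_query(atc_query())) gives one entry per statin; passing another class QID to atc_query reuses the same pattern for other drug classes.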

The data for drugs and chemical compounds is sourced from DrugBank using a so-called bot (see A simple way to write Wikidata bots [blog post] and Drug and Chemical compound items in Wikidata as a data source for Wikipedia infoboxes [video]).

There are plans to integrate OpenTrials information into Wikidata, that is, all studies registered in CT.gov and EudraCT that are available via the OpenTrials Explorer. For more about OpenTrials, see my recent blog post.

A first part of that work is to develop a data model for trials in Wikidata, based on the data elements of ClinicalTrials.gov. One example is the NCT number, the identifier of studies, which has been defined as a Wikidata property (P3098) with statements about it, such as its format as a regular expression: NCT(\d{8}). Other properties describe clinical trials, such as study phase.
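
The format statement on P3098 can be reused directly to validate identifiers, or to extract them from free text, for example when matching records for a master list of studies. A small Python sketch follows; the function names are hypothetical, and the word boundaries in the search pattern are my addition, so that a longer digit run such as NCT123456789 is not partially matched.

```python
import re

# Format regex stated on Wikidata property P3098 (the NCT number).
NCT_FORMAT = r"NCT(\d{8})"
_NCT_EXACT = re.compile(NCT_FORMAT)
# Word boundaries (an assumption, not part of P3098) avoid matching inside longer tokens.
_NCT_IN_TEXT = re.compile(rf"\b{NCT_FORMAT}\b")


def is_valid_nct(identifier: str) -> bool:
    """True only if the whole string matches the P3098 format, e.g. NCT01234567."""
    return _NCT_EXACT.fullmatch(identifier) is not None


def find_nct_ids(text: str) -> list[str]:
    """Return every NCT-formatted identifier found in free text, in order of appearance."""
    return [match.group(0) for match in _NCT_IN_TEXT.finditer(text)]
```

For instance, is_valid_nct("NCT0123456") is False because it has only seven digits, and the check is case-sensitive, so "nct01234567" is rejected as well.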